Function Module Details

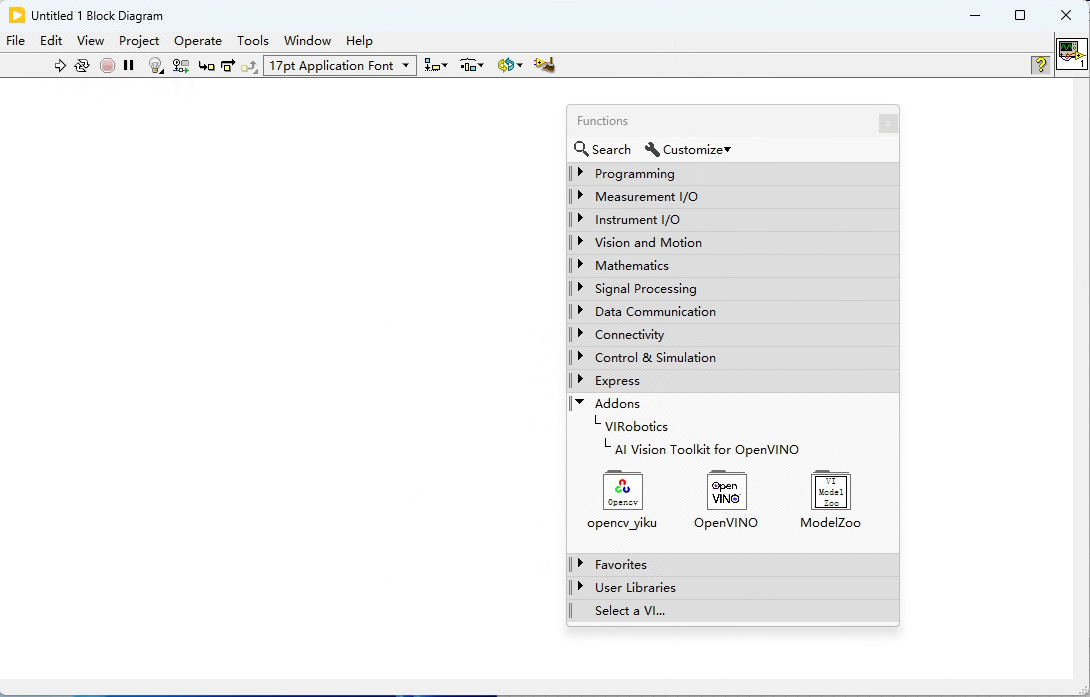

In LabVIEW, the function palette path for the AIVT-OV toolkit is: Block Diagram >> Functions >> Addons >> VIRobotics >> AI Vision Toolkit for OpenVINO

You will see the following three main modules: opencv_yiku, OpenVINO, and ModelZoo.

Here is a brief introduction to the three modules:

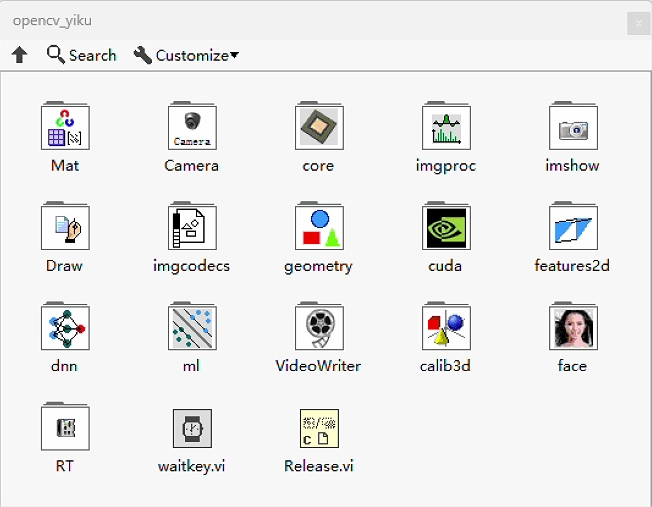

🔷 Module 1: opencv_yiku

Provides OpenCV image processing, camera capture, video input and output, traditional visual analysis, deep learning preprocessing, and other functions.

| Submodule | Function Description |
|---|---|
| Mat | Mat matrix data type definition and basic operations |
| Camera | USB, network, and industrial camera capture; video reading |
| core | Basic image processing operations |
| imgproc | Color space conversion, histograms, thresholding, contours, edge detection, filtering, mapping, Hough detection, corner detection, etc. |
| imshow | Image display control (OpenCV window) |
| Draw | Drawing rectangles, lines, text, and other image annotations |
| imgcodes | Reading, writing, encoding, and decoding image files |
| geometry | Geometric processing of point sets, rectangles, and contours |
| cuda | Querying and switching the GPU (CUDA) environment |
| features2d | 2D feature detection, description, and matching of image keypoints, for image registration, object recognition, image stitching, and similar tasks |
| dnn | AI inference through the OpenCV DNN module |
| ml | Basic machine learning models (SVM, etc.) |
| VideoWriter | Writing image frames to a video file |
| calib3d | Camera calibration, hand-eye calibration, 3D reconstruction |
| face | Face detection and recognition |
| RT | RT system paths |

✅ Suitable for preprocessing, traditional vision, vision calibration, and preparing inputs for AI models.

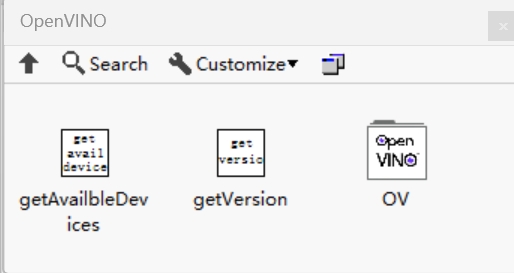

🔷 Module 2: OpenVINO

Provides model loading and accelerated inference for ONNX, IR, Paddle, and other model formats, with multi-device inference support (CPU/iGPU/dGPU).

| Submodule | Function Description |
|---|---|
| getAvailableDevices | Gets the list of OpenVINO inference devices available on the local machine |
| getVersion | Displays the current OpenVINO runtime version |
| OV | Calls the OpenVINO deep learning engine: loads IR and ONNX models, sets inputs and outputs, runs inference, and retrieves results |

⚠️ Usage recommendation: use a model path that contains only English characters, with no spaces or Chinese characters.
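The path recommendation above can be checked before a model is loaded. The following is a minimal Python sketch, assuming that an "English path" means an ASCII-only path; `check_model_path` is a hypothetical helper for illustration, not a VI or function provided by the toolkit.

```python
def check_model_path(path: str) -> list[str]:
    """Return the problems found in a model path; an empty list means OK.

    Hypothetical helper: applies the recommendation "English path,
    no spaces or Chinese" by treating English as ASCII-only.
    """
    problems = []
    # Any whitespace (regular or tab) violates the "no spaces" rule.
    if any(ch.isspace() for ch in path):
        problems.append("contains whitespace")
    # Chinese (or any other non-ASCII) characters violate the "English path" rule.
    if not path.isascii():
        problems.append("contains non-ASCII characters (e.g. Chinese)")
    return problems


if __name__ == "__main__":
    for p in [r"C:\models\yolov8n.xml", r"C:\my models\a.onnx", r"D:\模型\a.onnx"]:
        print(p, check_model_path(p) or "OK")
```

In a LabVIEW block diagram the same check would be built from string functions before the path is wired to the model-loading VI.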

Broad model support: integrates the OpenVINO™ deep learning inference engine and supports inference with IR, ONNX, and Paddle models produced by mainstream frameworks such as PyTorch, TensorFlow, ONNX, and PaddlePaddle.

Multiple hardware acceleration options: leverages Intel CPUs, integrated graphics, and discrete graphics for high-performance AI inference, providing low-latency visual feedback for industrial and commercial applications.
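When several devices are available, an application typically picks one from the list returned by getAvailableDevices. The Python sketch below shows one possible selection rule, assuming OpenVINO-style device names such as `CPU`, `GPU`, `GPU.0`, and `GPU.1`; `pick_device` and its preference order are hypothetical and not part of the toolkit.

```python
def pick_device(available: list[str], preferred: list[str]) -> str:
    """Return the first preferred device found in `available`, else "CPU".

    Hypothetical helper. A preferred entry such as "GPU" also matches
    indexed names such as "GPU.0"; among those, list order decides.
    CPU is assumed to always be usable as the fallback.
    """
    for want in preferred:
        for dev in available:
            if dev == want or dev.startswith(want + "."):
                return dev
    return "CPU"


if __name__ == "__main__":
    # Example device list, e.g. as obtained from getAvailableDevices.
    devices = ["CPU", "GPU.0", "GPU.1"]
    print(pick_device(devices, ["GPU.1", "GPU", "CPU"]))  # prefer a specific GPU
    print(pick_device(["CPU"], ["GPU"]))                  # no GPU: fall back to CPU
```

Keeping the preference list as data, rather than hard-coding a device name, lets the same program run unchanged on machines with or without a GPU.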

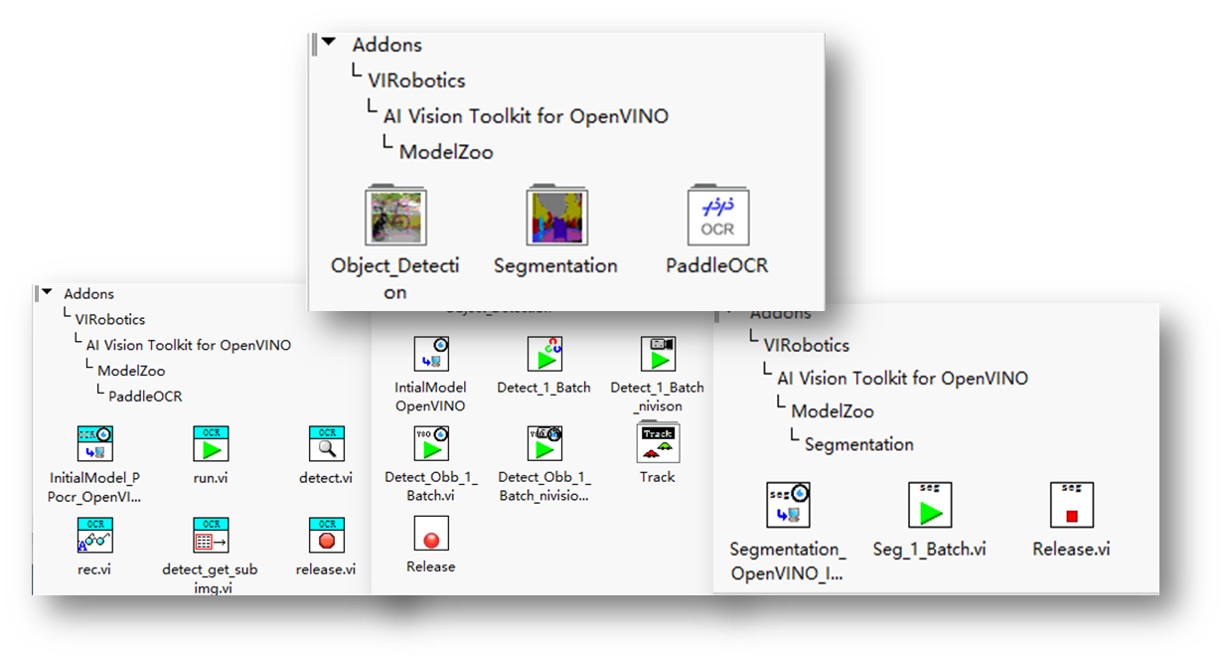

🔷 Module 3: ModelZoo

Provides built-in inference wrappers for common tasks, including YOLO, DeepLabV3/V3, SAM, and PaddleOCR, making it suitable for quickly building a complete pipeline.

| Submodule | Function Description |
|---|---|
| Object_Detection | Fast inference and automatic tracking with YOLOv5 to YOLOv13, RT-DETR, and other models |
| Segmentation | Fast semantic segmentation with models such as DeepLabV3/3, UNet, and SAM |
| PaddleOCR | Integrated text detection + text recognition pipeline |

🧩 Advantages:
- High encapsulation: each task can be completed by calling only 1 to 2 VIs.
- Strong scalability: supports model replacement, custom classes, and configurable runtime parameters.
- Multiple input sources: supports video streams, image sets, and live cameras, and accepts both Mat and nivision image data types.

🧠 Usage recommendations

| Task Type | Recommended Module |
|---|---|
| Image preprocessing, image acquisition | ✅ opencv_yiku |
| Loading your own ONNX/IR model | ✅ OpenVINO |
| Quickly building a complete AI system | ✅ ModelZoo |
| Teaching demonstrations, beginner introduction | ✅ ModelZoo with matching examples |

🔍 Further reading

For detailed descriptions of each function's input and output parameters, data types, and usage, refer to the toolkit's built-in help documentation: